Best underestimate cubic fitting

Use quadratic programming to fit a cubic polynomial below a curve

I was recently confronted with a problem: Given some curve or collection of data points, determine the polynomial that fits closest to the data but lies completely below it.

The solution I came up with: Use quadratic programming!

Quadratic programming is the mathematical process of solving optimization problems involving quadratic functions and linear constraints. In particular, they come in the form:

$$ \textrm{minimize } \frac{1}{2} x^T Q x + c^T x \ \textrm{ subject to } Ax \preceq b $$

If you think about least squares, you can convert least squares to a quadratic programming problem. This allows us to add constraints, namely the constraint that our solution must lie below the curve.

After we formalize the problem and write some code to use an off-the-shelf quadratic program solver, in this case quadprog, we have a solution right away.

import quadprog

import numpy as np

def fit_cubic(x, y):

# use different powers for a different degree polynomial

A = np.[.(, 3),.(),,.(len())]

G = A.T @ A

a = A.T @ -y

C = A.T

b = -y

coeffs = quadprog.(,,,)[0]

return -coeffsIt’s beautifully short because we rely on quadprog to work the magic.

To test, we can simulate a curve using the function below:

import scipy.stats as ss

def random_hills(x, num_hills, width_distribution):

centers = np.random.(.(),

.(),

size=)

widths = np.random.([0],

[1],

size=)

components = [ss.(,)

for center, width in zip(,)]

weights = np.random.(0, 1, size=)

weights = weights / np.() # ensuring they sum to 1

return np.([.() *

for, in zip(,)], axis=0)It’s just building a Gaussian mixture model manually given num_hills Gaussians with widths drawn from the width_distribution over the input domain x.

The width_distribution = (width_mean, width_standard_deviation).

Then, our script to solve this is:

# replace with whatever curve you have

x = np.(0, 30, 100)

y =(, 50, (1, 1.5))*10 + np.(/17)

# then fit!

cubic_coeffs =(,)We can quickly visualize this with Matplotlib.

import matplotlib.pyplot as plt

fig, ax = plt.()

ax.(,, label="data")

ax.(,.(,),

c='r',

label="fitted cubic polynomial")

fig.()

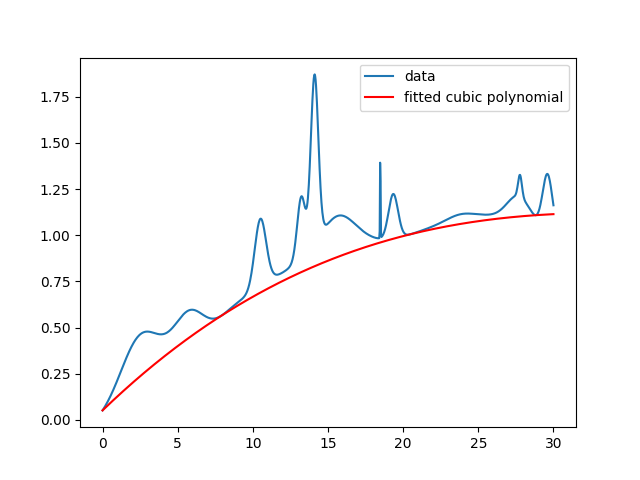

fig.()Then, we get something like:

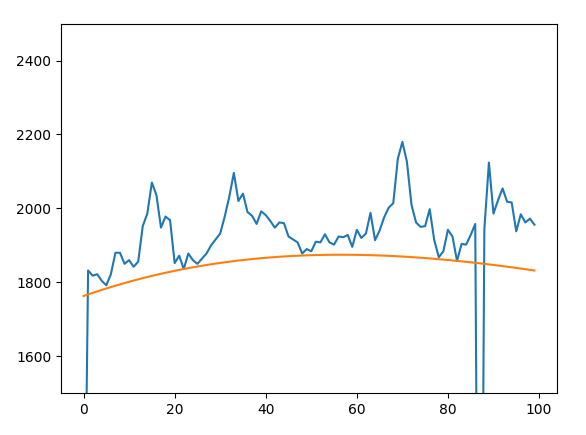

In my particular case, I’m working with noisy data. So there will sometimes be major outliers stemming from bad pixels in images. My quick solution is just to throw out outliers less than three standard deviations by filtering the input $x$ and $y$. This results in a fit like the following:

We’re using this to build better F-corona models for PUNCH.

More to come on that!

All together, the script is:

import quadprog

import numpy as np

import scipy.stats as ss

import matplotlib.pyplot as plt

def fit_cubic(x, y):

# use different powers for a different degree polynomial

A = np.[.(, 3),.(),,.(len())]

y = y

G = A.T @ A

a = A.T @ -y

C = A.T

b = -y

coeffs = quadprog.(,,,)[0]

return -coeffs

def random_hills(x, num_hills, width_distribution):

centers = np.random.(.(),.(), size=)

widths = np.random.([0],[1], size=)

components = [ss.(,) for center, width in zip(,)]

weights = np.random.(0, 1, size=)

weights = weights / np.() # ensuring they sum to 1

return np.([.() * for, in zip(,)], axis=0)

if __name__ == "__main__":

x = np.(0, 30, 1000)

y =(, 50, (1, 1.5)) * 10 + np.( / 17) # or whatever curve you want

cubic_coeffs =(,)

fig, ax = plt.()

ax.(,, label="data")

ax.(,.(,), c='r', label="fitted cubic polynomial")

ax.()

plt.()